(This post appeared on our successor blog, The Analysights Data Mine, on Friday, May 9, 2014).

As data continues to proliferate unabated in organizations, arriving faster and from more sources each day, decision makers find themselves perplexed. They struggle with several questions: How much data do we have? How fast is it coming in? Where is it coming from? What form does it take? How reliable is it? Is it correct? How long will it be useful? And this is before they even decide what they can and will do with the data!

Before a company can leverage big data successfully, it must decide upon its objectives and balance them against the data it has, the regulations governing the use of that data, and the information needs of all its functional areas. It must also assess the risks both to the security of the data and to the company’s viability. In short, the company must establish effective data governance.

What is Data Governance?

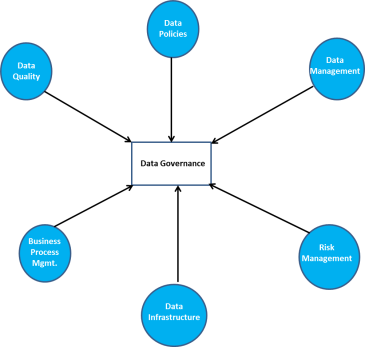

Data governance is a young and still evolving system of practices designed to help organizations ensure that their data is managed properly and in the best interest of the organization and its stakeholders. Data governance is an organization’s process for handling data by leveraging its data infrastructure, the quality and management of its data, its policies for using its data, its business process needs, and its risk management needs. An illustration of data governance is shown below:

Why Data Governance?

Data has many uses; it comes in many different forms, takes up a lot of space, and can be siloed; it may be subject to certain regulations, off-limits to some parties but freely available to others; and it must be validated and safeguarded. Data governance addresses all of these issues. Just as important, it ensures that the business is using its data to solve its defined business problems.

The explosion of regulations, such as Sarbanes-Oxley, Basel I, Basel II, Dodd-Frank, HIPAA, and a host of other rules regarding data privacy and security, is making the role of data governance all the more important.

Moreover, data comes in many different forms. Companies get sales data from the field or from store locations, and they get information about their employees from job applications. Data of this nature is often structured. Companies also collect data from their web logs and from social media such as Facebook and Twitter, as well as in the form of images, free text, and so forth; these data are largely unstructured but must be managed all the same. Through data governance, the company can decide what data to store and whether it has the infrastructure in place to store it.

The 6 Vs of Big Data

Many people familiar with big data know its proverbial “3 Vs”: Volume, Variety, and Velocity. But Kevin Normandeau, in a post for Inside Big Data, suggests that three more Vs pose even greater challenges: Veracity (cleanliness of the data), Validity (correctness and accuracy of the data), and Volatility (how long the data remains valid and should be stored). These additional Vs make data governance an even greater necessity.

What Does Effective Data Governance Look Like?

Effective data governance begins with designating an owner for the governance effort: an individual or team who will be held accountable.

The person or team owning the data governance function must be able to communicate with all department heads to understand what data they have access to, where they store it, how they currently use it, and what they need it for. They must also be adept at working with third-party vendors and with external customers of their data.

The data governance team must understand both the internal policies and the external regulations governing the use of data, as well as which data are subject to which regulations or policies.

The data governance team must also assess the value of the data the company collects; estimate the risks involved if the company makes decisions based on invalid or incomplete data, or if its data infrastructure fails or is hacked; and design systems to minimize these risks.

Once the data has been inventoried and matched to its relevant constraints, and the team has developed its processes for data collection and storage, the team must be able to draft, document, implement, and enforce its governance processes. Finally, it must train the organization’s employees in the proper collection and use of the data, so that they know what they can and cannot do.
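To make the inventory step a little more concrete, here is a minimal Python sketch of a data inventory that records each data asset’s source, form, and accountable owner, maps category tags to regulations that may constrain the asset, and flags assets that still lack an owner. Everything in it is hypothetical: the `DataAsset` class, the category tags, the `RULES_BY_CATEGORY` table, and the example assets are illustrations, and the tag-to-regulation mapping is a deliberate oversimplification. Deciding which rules actually apply remains the governance team’s job.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Hypothetical mapping from data-category tags to regulations named in this post.
# Illustrative only: real applicability must be determined case by case.
RULES_BY_CATEGORY: Dict[str, List[str]] = {
    "health": ["HIPAA"],
    "financial_reporting": ["Sarbanes-Oxley"],
}


@dataclass
class DataAsset:
    """One entry in a hypothetical data inventory."""
    name: str
    source: str                                   # where the data comes from
    structured: bool                              # structured vs. unstructured
    categories: Set[str] = field(default_factory=set)
    owner: Optional[str] = None                   # accountable person or team


def constraints_for(asset: DataAsset) -> List[str]:
    """Return the sorted, de-duplicated rules tagged for this asset."""
    rules: Set[str] = set()
    for category in asset.categories:
        # Unknown categories contribute no rules rather than raising an error.
        rules.update(RULES_BY_CATEGORY.get(category, []))
    return sorted(rules)


def unowned_assets(inventory: List[DataAsset]) -> List[str]:
    """Names of assets with no accountable owner, a basic governance gap."""
    return [asset.name for asset in inventory if asset.owner is None]


# Hypothetical example inventory.
example_inventory = [
    DataAsset("store_sales", "point-of-sale systems", True,
              {"financial_reporting"}, owner="Finance"),
    DataAsset("patient_claims", "claims processing", True, {"health"}),
    DataAsset("social_mentions", "Twitter", False),
]

if __name__ == "__main__":
    for asset in example_inventory:
        print(asset.name, constraints_for(asset))
    print("Unowned:", unowned_assets(example_inventory))
```

Running it lists each asset with its tagged rules and reports `patient_claims` and `social_mentions` as having no owner. Keeping the category-to-rule mapping in one table, rather than hard-coding rules into each asset, means a new regulation or policy can be added in a single place.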

Without effective data governance, companies will find themselves vulnerable to hackers, fines, and other business interruptions. They will be less efficient, because inaccurate data leads to rework and inadequate data leads to slower, less effective decision making. And they will be less profitable, because lost or incomplete data will often cause them to miss opportunities or to take incorrect actions based on decisions made from such data. Good data governance helps ensure that companies get the most out of their data.

****************************************************

Follow Analysights on Facebook and Twitter!

Now you can find out about new posts to both Insight Central and our successor blog, The Analysights Data Mine, by “Liking” us on Facebook (just look for Analysights) or by following @Analysights on Twitter. Each time a new post appears on Insight Central or The Analysights Data Mine, you will be notified through your Facebook News Feed or your Twitter feed. Thanks!